Liquid cooling in data centers

increasing efficiency and necessity for future viability

Introduction

This article provides an introduction to liquid cooling in data centers. It lists the advantages and challenges of liquid cooling compared with conventional air cooling, briefly explains its thermodynamic principles and compares different liquid-based cooling concepts. This is followed by a classification of its areas of application and an outlook on the innovations still to be expected in this field.

Previous applications of liquid cooling

Although liquid cooling may seem like the newly discovered star in the IT cooling sky, the technology has played an important role for many years. In fact, everyday life is indirectly shaped by liquid-cooled technology: liquid-cooled high-voltage transformers have been used in the power supply for well over a century, and liquid-cooled combustion engines have become indispensable in the automotive sector [1].

IBM’s System/360, the first liquid-cooled computer, appeared in 1964. In the 1980s, liquid cooling was very popular for supercomputers. However, the technology went through a series of ups and downs until the early 2000s, when it began a steady upward trend. This trend is driven by the rapidly increasing power densities required by high-performance computing, artificial intelligence and crypto mining. In recent decades, liquid cooling kits have also conquered the consumer PC market [2], where the focus is on silent cooling, efficient overclocking and colorful RGB lighting [3], [4].

Purpose of liquid cooling

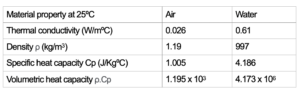

Liquid cooling offers numerous advantages over conventional air cooling, which are both economically and ecologically significant. When talking about liquid cooling, we often implicitly talk about water cooling. A fundamental physical relationship illustrating liquid cooling’s superiority lies in the combination of high density and high heat capacity of water (Table 1). Compared to air, water can capture and transport a considerably greater amount of heat per unit volume. This enables more efficient heat dissipation.

Table 1: Comparison of the thermodynamic properties of air and water

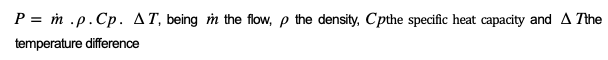
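To put the density and heat-capacity argument into numbers, the sketch below multiplies density by specific heat capacity to obtain the volumetric heat capacity, i.e. the heat one cubic metre of fluid absorbs per kelvin of temperature rise. The property values are approximate textbook figures near room temperature that we assume here; they are not read from Table 1.

```python
# Approximate properties near 20 °C (assumed textbook values, not from Table 1).
FLUIDS = {
    # name: (density in kg/m^3, specific heat capacity in J/(kg*K))
    "air": (1.2, 1005.0),
    "water": (998.0, 4182.0),
}


def volumetric_heat_capacity(density: float, cp: float) -> float:
    """Heat absorbed per cubic metre and per kelvin, in J/(m^3*K)."""
    return density * cp


rho_cp = {name: volumetric_heat_capacity(*props) for name, props in FLUIDS.items()}
ratio = rho_cp["water"] / rho_cp["air"]

for name, value in rho_cp.items():
    print(f"{name:>5}: {value / 1000:8.1f} kJ/(m^3*K)")
print(f"water stores about {ratio:.0f} times more heat per unit volume than air")
```

With these assumed values, water stores roughly 3,500 times more heat per unit volume than air.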

This means that a much smaller flow, by volume as well as by mass, has to be moved to achieve the same cooling capacity (Figure 1). Less energy is therefore required to operate the cooling system, which improves energy efficiency. Moving less mass also reduces the load on mechanical components such as fans and pumps, which in turn lowers operating costs.

Figure 1: Volume flow of air and water with the same heat transfer
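The relationship behind Figure 1 is the steady-state energy balance Q = ρ · V̇ · c_p · ΔT. The sketch below solves it for the volume flow and the mass flow needed to carry a hypothetical 10 kW heat load with a hypothetical 10 K temperature rise, again using assumed textbook property values rather than the figure's own data.

```python
def volume_flow_m3_s(heat_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Volume flow needed to carry heat_w with a rise of delta_t_k (Q = rho * V * cp * dT)."""
    return heat_w / (density * cp * delta_t_k)


def mass_flow_kg_s(heat_w: float, cp: float, delta_t_k: float) -> float:
    """Mass flow needed for the same heat load (Q = m * cp * dT)."""
    return heat_w / (cp * delta_t_k)


HEAT_LOAD_W = 10_000.0   # hypothetical 10 kW heat load
DELTA_T_K = 10.0         # hypothetical coolant temperature rise
AIR = (1.2, 1005.0)      # assumed density in kg/m^3 and cp in J/(kg*K)
WATER = (998.0, 4182.0)

air_v = volume_flow_m3_s(HEAT_LOAD_W, *AIR, DELTA_T_K)
water_v = volume_flow_m3_s(HEAT_LOAD_W, *WATER, DELTA_T_K)
air_m = mass_flow_kg_s(HEAT_LOAD_W, AIR[1], DELTA_T_K)
water_m = mass_flow_kg_s(HEAT_LOAD_W, WATER[1], DELTA_T_K)

print(f"air:   {air_v:.3f} m^3/s, {air_m:.3f} kg/s")
print(f"water: {water_v * 1000:.3f} L/s, {water_m:.3f} kg/s")
```

Under these assumptions, air needs about 0.83 m³/s while water needs only about 0.24 L/s; the mass flow of water is also roughly four times lower because of its higher specific heat.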

Water is an excellent fluid for heat extraction, but it is not the only one in use. Other fluids used in liquid cooling also have a far higher volumetric heat capacity than air because of their much higher density; some are even denser than water (e.g. HFE 7100 has a density of 1510 kg/m³). Another significant advantage of liquid cooling is the ability to maintain a higher temperature level in the cooling circuit. Because liquids transfer heat more effectively, a given amount of heat can be dissipated through a comparable heat-exchange surface with a smaller temperature difference (ΔT) between the component and the coolant, so the coolant itself can be warmer. While air-cooled data centers are operated at an air temperature of approx. 27 °C, a liquid circuit for server cooling can be operated at between 45 °C and 60 °C, as discussed below. This has two advantages. On the one hand, the higher temperature level returning from the cooling circuit enables a greater proportion of free cooling. At low ambient temperatures (below 16 °C [5]), water cooling can be operated without energy-intensive chillers, which leads to considerable savings in energy and investment costs.

On the other hand, it enables effective utilization of waste heat by diverting the heat from cooling towers and dry coolers to nearby heat consumers. The recovered heat can be used, for example, to heat buildings or to supply processes requiring thermal energy. This improves resource usage and saves costs both for the data center (no need to reject the heat artificially) and on the heat demand side (no need to generate the heat) (see our previous blog post).
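A simple way to see why a warmer circuit allows more free cooling: without a chiller, the coolant can only be cooled to roughly the outdoor temperature plus an approach temperature of the dry cooler and heat exchangers. The sketch below counts how often that is sufficient. The two supply temperatures, the 6 K approach and the list of outdoor temperatures are hypothetical illustration values, not measured climate data.

```python
from typing import Sequence


def max_free_cooling_ambient_c(supply_temp_c: float, approach_k: float) -> float:
    """Highest outdoor temperature at which the coolant can still be cooled to
    supply_temp_c without a chiller (approach_k is an assumed temperature gap
    across the dry cooler and heat exchangers)."""
    return supply_temp_c - approach_k


def free_cooling_share(ambient_c: Sequence[float], supply_temp_c: float, approach_k: float) -> float:
    """Fraction of the given outdoor temperatures at which free cooling suffices."""
    limit = max_free_cooling_ambient_c(supply_temp_c, approach_k)
    return sum(1 for t in ambient_c if t <= limit) / len(ambient_c)


# Made-up outdoor temperatures in °C for illustration only, NOT climate data.
sample_ambient = [-5.0, 2.0, 8.0, 12.0, 15.0, 18.0, 21.0, 24.0, 27.0, 31.0, 35.0, 38.0]
APPROACH_K = 6.0  # hypothetical approach temperature

for label, supply in [("20 °C supply (hypothetical, air cooling)", 20.0),
                      ("45 °C supply (liquid cooling)", 45.0)]:
    share = free_cooling_share(sample_ambient, supply, APPROACH_K)
    limit = max_free_cooling_ambient_c(supply, APPROACH_K)
    print(f"{label}: chiller-free up to {limit:.0f} °C -> {share:.0%} of samples")
```

The design choice is deliberately simple: a real assessment would use hourly weather data for the site and the actual part-load behavior of the dry coolers.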

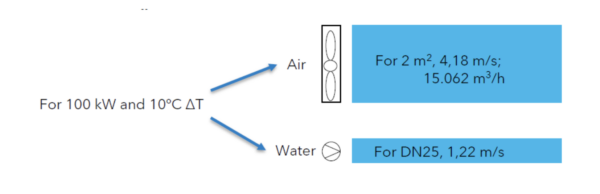

For classification purposes, Table 2 shows the rack power densities that the different cooling methods can handle:

Table 2: Rack power density achievable with different cooling methods

Cooling fluids

Water-based liquids

Water-based liquids are used for indirect and direct to chip liquid cooling. De-ionized water is used in the cooling circuits to practically eliminate electrical conductivity and the chemical corrosion caused by ions and minerals dissolved in the water. In addition, corrosion inhibitors and biocides are added to the coolant to protect the system components against electrochemical corrosion and to inhibit the growth of organic matter. For outdoor cooling circuits, e.g. for free cooling, antifreeze (ethylene or propylene glycol) must also be added to prevent the coolant from freezing and causing frost damage at temperatures below 0 °C. It is becoming more common to find propylene glycol (PG) in the primary cooling circuits at server level as well, because it helps to stabilize the fluid and requires less treatment [6]. Because water readily supports life, keeping it stable and clean can be challenging, particularly in a closed loop that is heated non-uniformly (some servers on, others off, some hotter than others) and is in contact with different metals and plastics.

Immersion fluids

In contrast to cold plate cooling, immersion cooling submerges the entire server in a special cooling fluid. These fluids are thermally conductive and dielectric (electrically non-conductive) liquids, which can be divided into four subgroups [7]: mineral oils, de-ionized water, perfluorocarbon-based fluids and synthetic fluids.

The choice of fluid depends on various factors. Mineral oils, and possibly de-ionized water in the future (some research has been done with power electronics, but not yet with IT hardware [8]), are more suitable for single-phase systems, while perfluorocarbon-based and synthetic fluids are more suitable for systems with a phase change. How these techniques work is explained further below. Other aspects also influence the choice of coolant: some immersion fluids have a high GWP (Global Warming Potential), while others are toxic.

Liquid cooling in white space

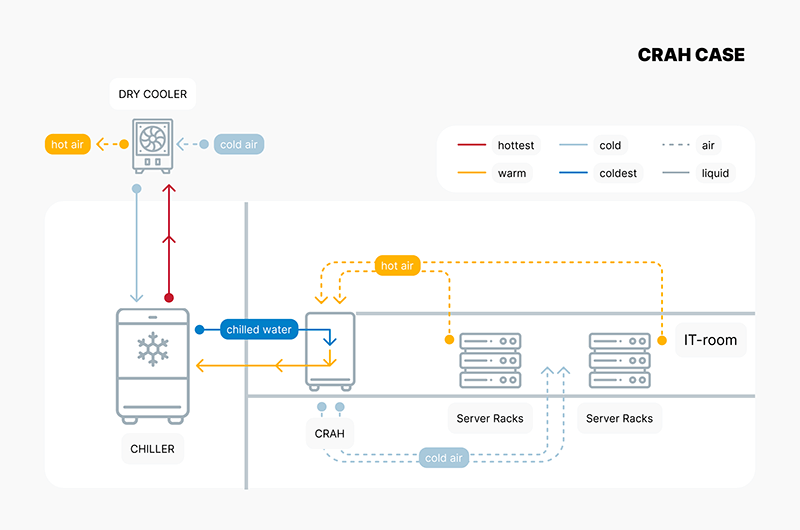

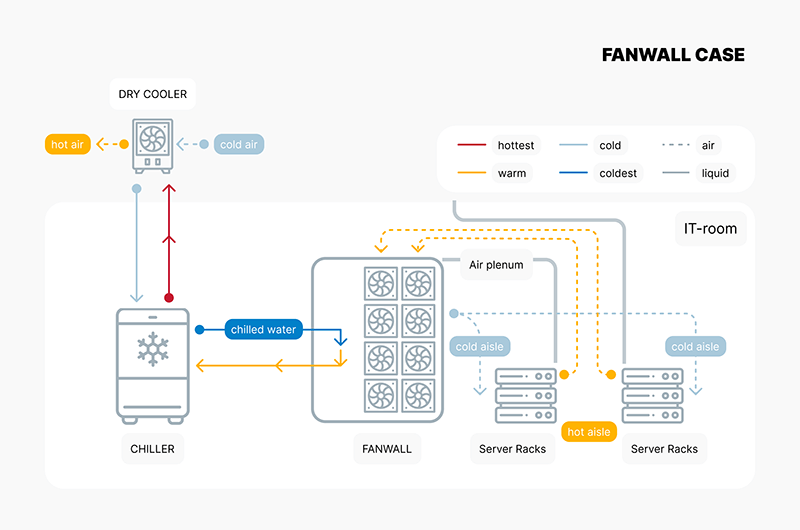

Indirect liquid cooling

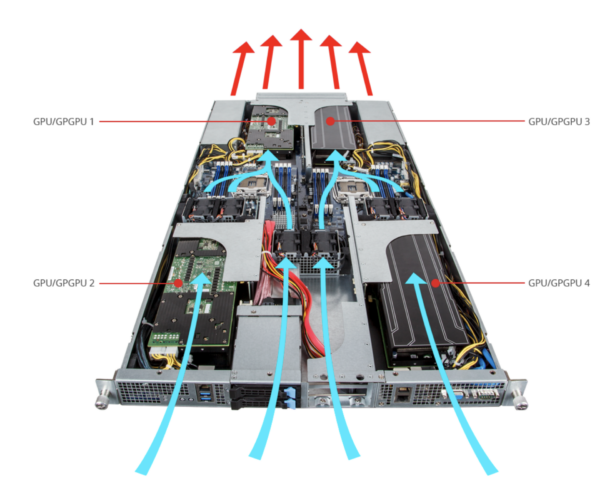

With indirect liquid cooling, the waste heat from the servers is first released into the air and then transferred from the air to a liquid (generally a water-based liquid), e.g. at the rack, which carries it out of the white space. Classic air-cooled servers reject their heat through an air flow driven through the server by their internal fans. Cold air is blown into the IT room, absorbs the heat emitted by the chips, hard disks, RAM, power supply units, etc. via heat sinks and component surfaces, and leaves the rear of the server at a higher temperature (Figure 2).

Figure 2: Air flow of an air-cooled GPU server [9]

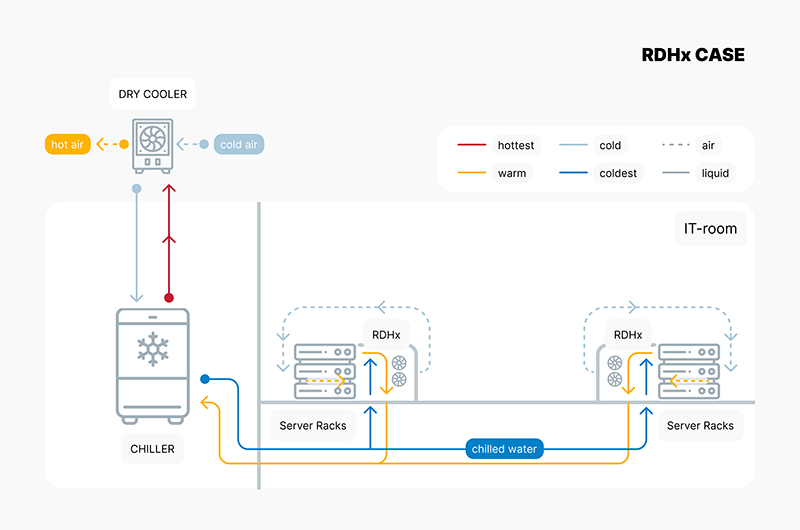

Computer room air handlers (CRAHs) and fanwalls are placed at the perimeter of the IT space or integrated into its walls. Rear door heat exchangers (RDHx), i.e. air-to-liquid heat exchangers fitted with fans, are installed at the rear of the racks (Figure 3). A coolant, generally at a temperature between 18 °C and 27 °C [10], flows through their coils and cools the warm air leaving the servers, allowing cooling capacities of up to 78 kW/rack [11] to be achieved [12].

Figure 3: Rack with rear door heat exchanger [13]

In contrast to typical air-cooled server rooms, the hot air is cooled down to the temperature required at the server inlet directly as it leaves the rack. As a result, this cooling system does not release warm air outside the rack that would have to be routed separately from the cold air, which eliminates the need for an enclosure to separate the air flows. A Coolant Distribution Unit (CDU) can be used to separate the closed rack-level cooling circuit from a secondary circuit connected to the facility’s chillers, and to monitor and control the distribution of coolant to the rear door coolers. Depending on the temperature difference between the returning coolant and the outdoor air, free cooling can be used to dissipate the heat to the environment instead of a chiller.
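Whether a rear door can return the air at a suitable temperature can be estimated with the heat exchanger effectiveness ε. The sketch below assumes that the air stream has the smaller heat capacity rate of the two streams, so the air outlet temperature follows directly from ε. The server exhaust temperature, the coil effectiveness and the 27 °C inlet limit are hypothetical values; the 20 °C water temperature is taken from the 18 °C to 27 °C range quoted above.

```python
def rdhx_air_outlet_c(air_in_c: float, water_in_c: float, effectiveness: float) -> float:
    """Air temperature leaving a rear door heat exchanger.

    Assumes air is the stream with the smaller heat capacity rate (C_min), so
    T_air_out = T_air_in - eps * (T_air_in - T_water_in).
    """
    if not 0.0 <= effectiveness <= 1.0:
        raise ValueError("effectiveness must be between 0 and 1")
    return air_in_c - effectiveness * (air_in_c - water_in_c)


SERVER_EXHAUST_C = 40.0  # hypothetical hot-air temperature behind the servers
WATER_SUPPLY_C = 20.0    # within the 18-27 °C range quoted above
EFFECTIVENESS = 0.75     # hypothetical coil effectiveness
INLET_LIMIT_C = 27.0     # assumed target for air entering the next servers

t_out = rdhx_air_outlet_c(SERVER_EXHAUST_C, WATER_SUPPLY_C, EFFECTIVENESS)
print(f"air leaving the door: {t_out:.1f} °C, within limit: {t_out <= INLET_LIMIT_C}")
```

With 27 °C water instead, the same hypothetical coil would only bring the air down to about 30 °C, which illustrates why the coolant supply temperature of a rear door stays comparatively low.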

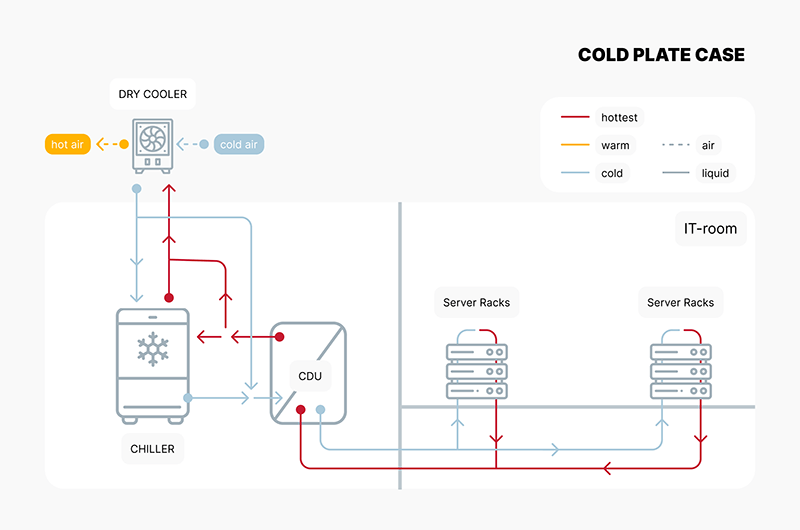

Direct to chip liquid cooling

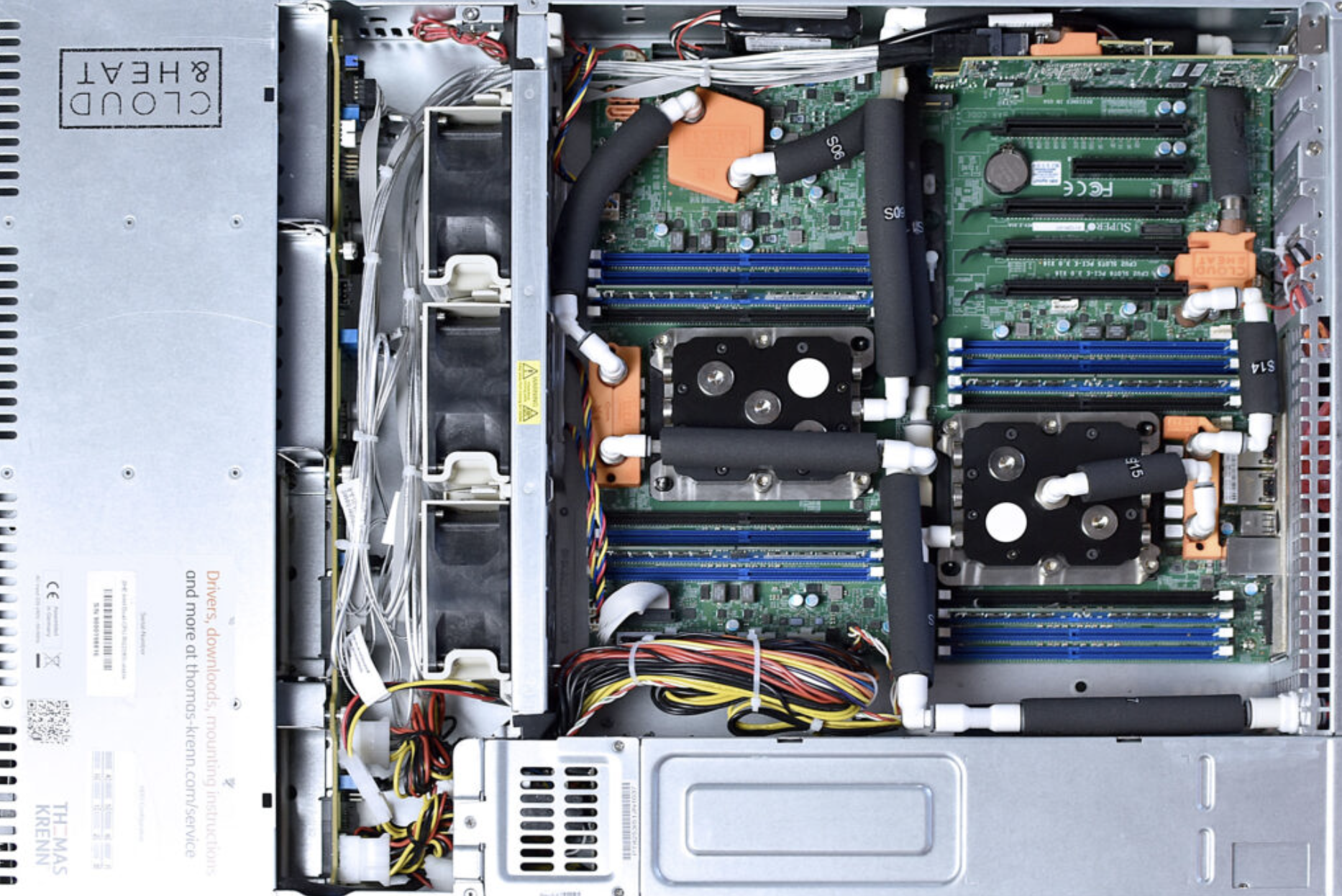

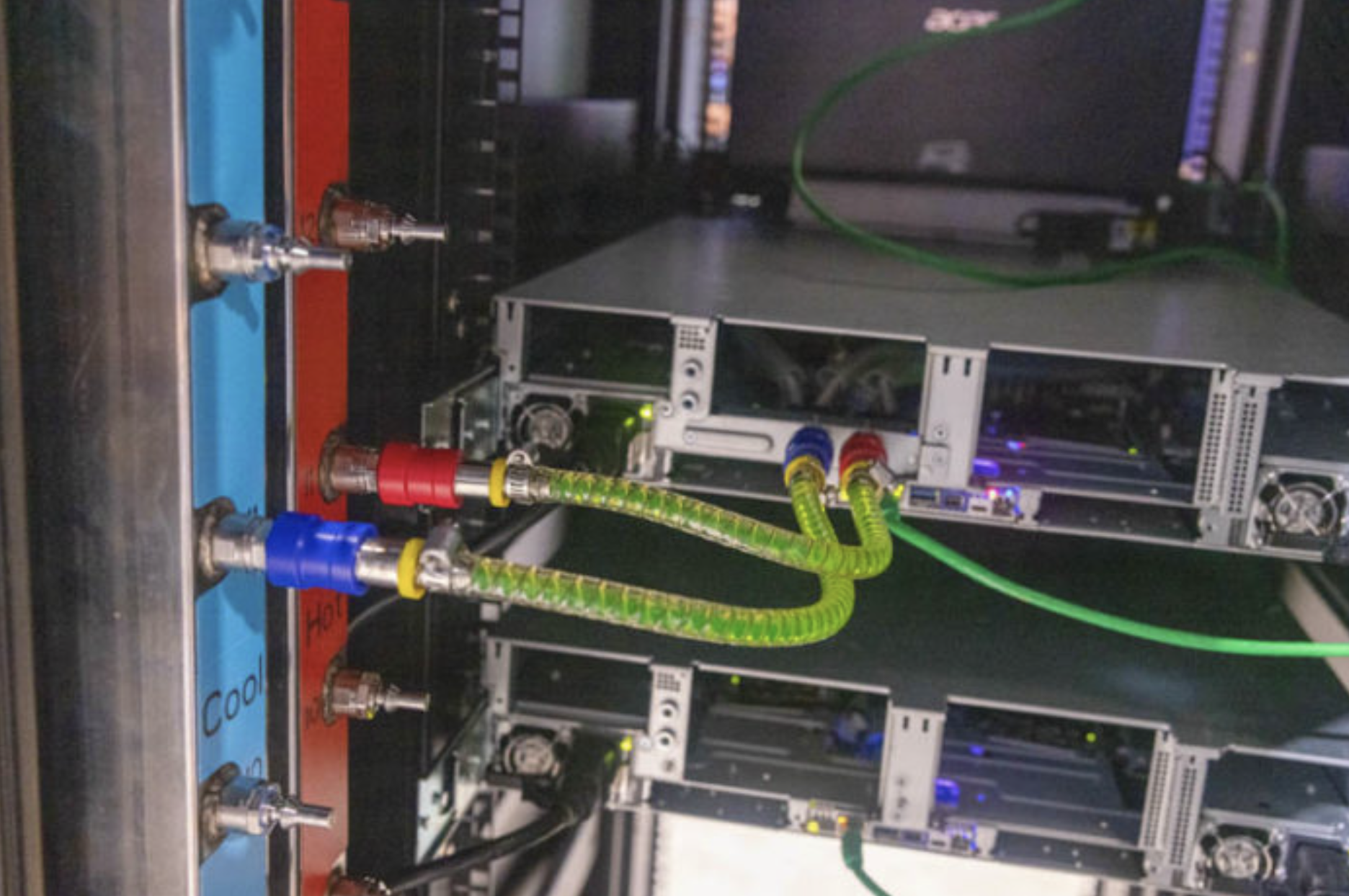

Direct to chip liquid cooling goes one step further and brings the cooling liquid into the server, right up to the heat sources (CPU, GPU, RAM, etc.). Heat sinks with liquid flowing through them (cold plates) are attached to the heat-emitting components to cool them (Figure 4). Because liquids have better heat transfer coefficients than air, as explained above, the coolant can exceed 50 °C at the server outlet, depending on the server type [14]. Furthermore, the higher heat capacity and density of the liquid allow a more compact server design with a correspondingly higher power density (up to 100 kW/rack) [15]. In the rack, the servers are connected to manifolds (Figure 5) for liquid distribution, generally via dripless quick-release couplings, so that the coolant flows through them in parallel.

Figure 4: Cloud&Heat Technologies, direct to chip cooled server [16]

Figure 5: Manifold (left) in a rack for direct to chip liquid cooled servers [17]
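Because the servers on a manifold are connected in parallel, each one sees the same coolant inlet temperature, and its outlet temperature follows from an energy balance over the server. The sketch below computes the outlet temperature of each server and the flow-weighted mixed return temperature in the manifold. The server powers, flow rates, the 45 °C inlet and the specific heat of plain water are assumptions; a glycol mixture would have a lower specific heat.

```python
from typing import Sequence, Tuple

CP_COOLANT = 4182.0  # J/(kg*K), assumed plain water; glycol mixtures are lower


def server_outlet_c(inlet_c: float, power_w: float, flow_kg_s: float, cp: float = CP_COOLANT) -> float:
    """Coolant temperature leaving one server: T_out = T_in + P / (m * cp)."""
    return inlet_c + power_w / (flow_kg_s * cp)


def manifold_return_c(inlet_c: float, servers: Sequence[Tuple[float, float]], cp: float = CP_COOLANT) -> float:
    """Flow-weighted mixed temperature in the return manifold.

    servers: (power in W, coolant mass flow in kg/s) per server; all servers
    see the same inlet temperature because they are connected in parallel.
    """
    total_flow = sum(flow for _, flow in servers)
    mixed = sum(flow * server_outlet_c(inlet_c, power, flow, cp) for power, flow in servers)
    return mixed / total_flow


INLET_C = 45.0                                           # hypothetical supply temperature
rack = [(2000.0, 0.05), (1500.0, 0.05), (2500.0, 0.06)]  # hypothetical servers

for i, (power, flow) in enumerate(rack, start=1):
    print(f"server {i}: outlet {server_outlet_c(INLET_C, power, flow):.1f} °C")
print(f"manifold return: {manifold_return_c(INLET_C, rack):.1f} °C")
```

As a consistency check, the mixed return temperature equals the inlet temperature plus the total rack power divided by the total mass flow times c_p, which is what an energy balance over the whole rack requires.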

With liquid-cooled servers, generally only a few components such as hard disks, power supply units and, in some cases, RAM emit heat into the air, so additional air-cooling devices and their space-intensive air heat exchangers can be reduced to a minimum. Although cold plates for hard drives exist [18], the heat they extract is usually too small to justify widespread deployment, and they can make hot-plugging and removing the drives more difficult. A CDU uses pumps and valves to control the distribution of the coolant to the racks according to their cooling requirements, and monitors the closed liquid circuit. Different circuit topologies are used, from a circuit with its own CDU in the rack to a central CDU distributing coolant to several rows of racks. When high liquid temperatures are used in the circuit, free cooling can be operated all year round via a liquid circuit to the environment, and energy-intensive compression chillers can be dispensed with. In addition, the high temperature of the coolant allows the extracted heat to be recovered, for example for heating buildings, swimming pools or greenhouses, thus saving CO2 elsewhere.
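For a rough idea of the quantities available for heat recovery: practically all electrical power drawn by IT equipment ends up as heat. The sketch below estimates the heat that can be delivered to consumers per year; the average IT load, the share captured by the liquid circuit and the share a nearby consumer can actually use are hypothetical parameters.

```python
HOURS_PER_YEAR = 8760


def recoverable_heat_mwh(avg_it_load_kw: float, capture_fraction: float, reuse_fraction: float) -> float:
    """Heat per year that can be delivered to heat consumers, in MWh.

    Assumes practically all electrical IT power is converted into heat.
    capture_fraction: share of that heat taken up by the liquid circuit (hypothetical).
    reuse_fraction: share of the captured heat a consumer can use (hypothetical).
    """
    heat_kwh = avg_it_load_kw * capture_fraction * reuse_fraction * HOURS_PER_YEAR
    return heat_kwh / 1000.0


# Hypothetical example: 600 kW average IT load, 90% captured, half of it used.
print(f"{recoverable_heat_mwh(600.0, 0.9, 0.5):.0f} MWh per year")
```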

Because fully liquid-cooled servers are still less widely available on the market, hybrid servers, which are partly liquid-cooled and partly air-cooled, are often used today. Combined with rear door coolers (indirect liquid cooling), they nevertheless allow a completely liquid-cooled white space, controlled via a CDU, that can later be converted to direct to chip liquid cooling with little effort.
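With hybrid servers, the heat load of a rack splits into a part taken up by the cold plates and a residual part released into the air, which the rear door cooler has to handle. The sketch below performs this split and checks it against a rear door capacity; the server count and power, the 80% liquid capture fraction and the rear door capacity are hypothetical.

```python
from typing import Sequence, Tuple


def rack_heat_split_w(server_powers_w: Sequence[float], liquid_capture: float) -> Tuple[float, float]:
    """Split the rack heat load into (heat to liquid via cold plates, heat to air)."""
    if not 0.0 <= liquid_capture <= 1.0:
        raise ValueError("liquid_capture must be between 0 and 1")
    total = sum(server_powers_w)
    to_liquid = total * liquid_capture
    return to_liquid, total - to_liquid


servers = [1500.0] * 20     # hypothetical: 20 hybrid servers at 1.5 kW each
LIQUID_CAPTURE = 0.8        # hypothetical share removed by the cold plates
RDHX_CAPACITY_W = 15_000.0  # hypothetical rear door cooling capacity

liquid_w, air_w = rack_heat_split_w(servers, LIQUID_CAPTURE)
print(f"to liquid: {liquid_w / 1000:.1f} kW, to air: {air_w / 1000:.1f} kW")
print(f"rear door sufficient: {air_w <= RDHX_CAPACITY_W}")
```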

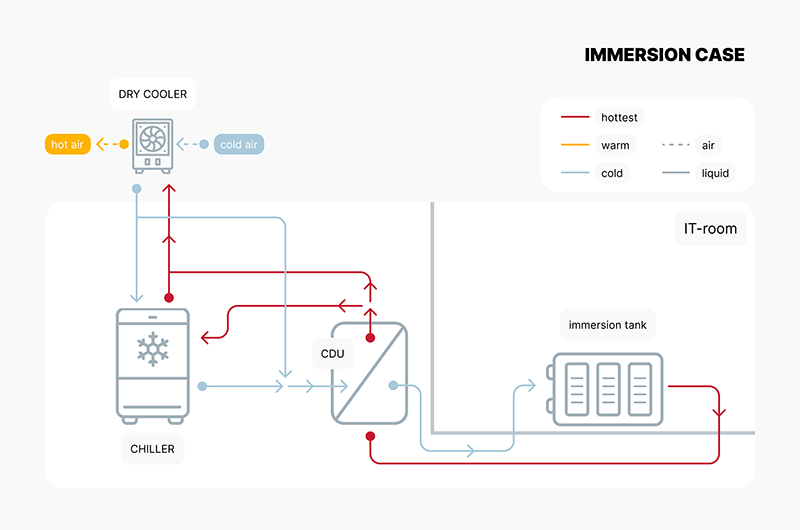

Immersion cooling

As explained above, immersion cooling submerges the entire server in engineered liquids or adapted natural fluids. The liquid can circulate either actively or passively in the tank. Before the server is immersed, it needs to be modified: generally, the fans and the bulky heat sinks on top of the chips must be removed to ensure proper operation. Immersion cooling systems can reach coolant temperatures as high as cold plate systems, and they capture almost 100% of the heat because very little is dissipated into the air, which significantly reduces the need for mechanical cooling of the IT space.

Essentially, two different methods of immersion cooling can be distinguished:

Single-phase immersion cooling

In single-phase immersion cooling, the servers are immersed in a tank (Figure 6) filled with a special cooling liquid that remains liquid during operation and has a much higher thermal conductivity than air. The heated liquid circulates, either actively via pumps or passively driven by temperature differences, through a heat exchanger and transfers the heat to an external circuit (a free-cooling or heat reuse circuit, as with cold plate systems). As mentioned above, fluids such as mineral oil can be used for this [19].

Figure 6: Single-phase immersion cooling server [20]

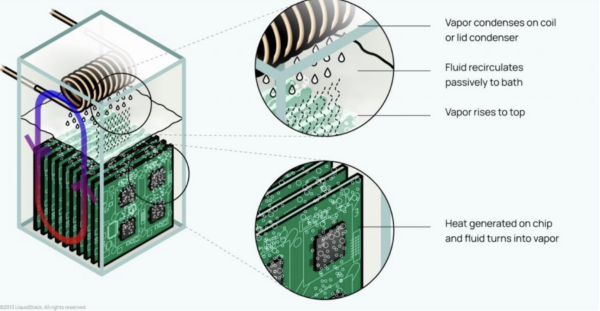

Two-phase immersion cooling

Two-phase immersion cooling goes one step further by using engineered cooling fluids with a low boiling point that partially evaporate during operation. The vapor rises from the immersion bath, condenses on a condenser coil and drips back into the bath (Figure 7). This phase change makes heat transfer even more efficient, because evaporating a liquid absorbs a particularly large amount of heat, and it enables even higher power densities in data centers [21]. However, some two-phase immersion cooling systems rely on liquids that may no longer be used in Europe in the future because of the new PFAS regulation, due either to their toxicity or to their high global warming potential [22], [23].

Figure 7: Passive two-phase immersion cooling [24]
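The advantage of the phase change can be quantified by comparing the latent heat of vaporization with the sensible heat the same liquid absorbs when it is only warmed. The sketch below does this for a hypothetical 50 kW tank; the latent heat of 100 kJ/kg and the specific heat of 1.1 kJ/(kg·K) are assumed order-of-magnitude values, not data for a specific commercial fluid.

```python
LATENT_HEAT_J_KG = 100_000.0  # assumed heat of vaporization (order of magnitude)
CP_LIQUID_J_KG_K = 1_100.0    # assumed specific heat of the liquid phase


def evaporation_rate_kg_s(heat_w: float, latent_heat_j_kg: float = LATENT_HEAT_J_KG) -> float:
    """Mass of fluid that must evaporate per second to absorb heat_w."""
    return heat_w / latent_heat_j_kg


def sensible_flow_kg_s(heat_w: float, delta_t_k: float, cp: float = CP_LIQUID_J_KG_K) -> float:
    """Mass flow needed if the same fluid only warms up by delta_t_k."""
    return heat_w / (cp * delta_t_k)


TANK_LOAD_W = 50_000.0  # hypothetical heat load of one tank
DELTA_T_K = 10.0        # hypothetical temperature rise without phase change

evap = evaporation_rate_kg_s(TANK_LOAD_W)
sensible = sensible_flow_kg_s(TANK_LOAD_W, DELTA_T_K)
print(f"evaporating: {evap:.2f} kg/s, warming by {DELTA_T_K:.0f} K: {sensible:.2f} kg/s")
print(f"ratio of fluid mass processed: {sensible / evap:.0f}")
```

With these assumptions, about 0.5 kg/s has to evaporate, whereas warming the liquid by 10 K would require roughly nine times as much mass flow.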

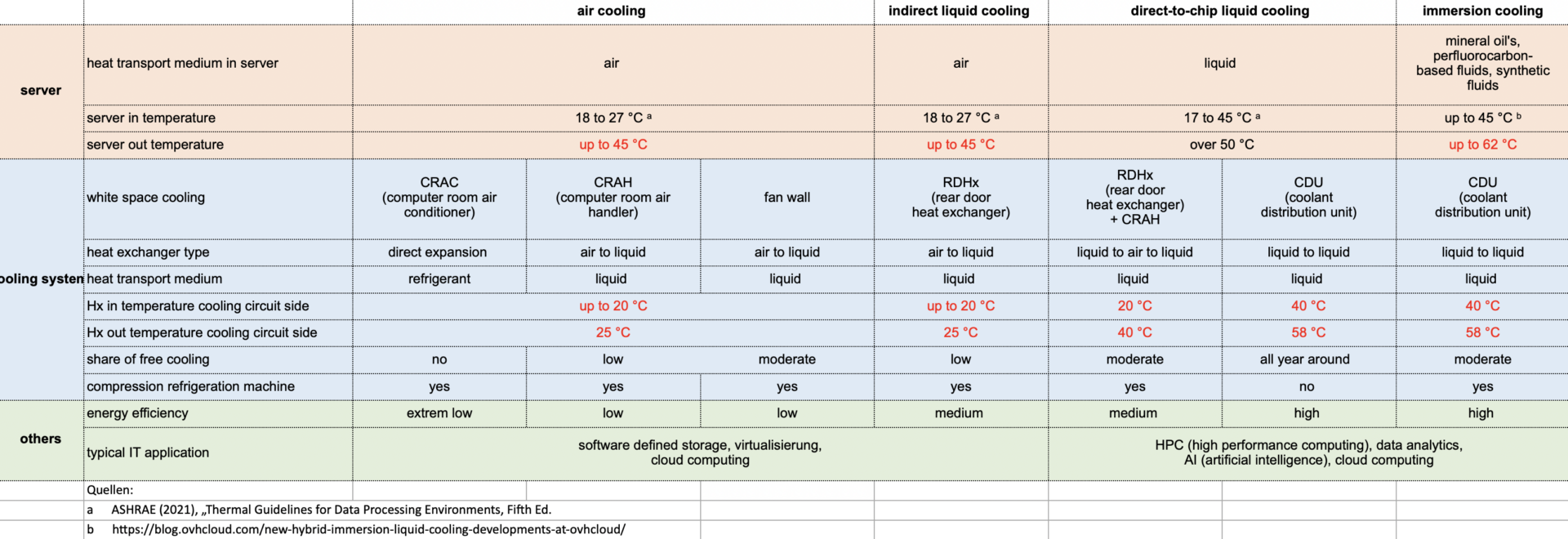

Comparison of cooling technologies

Table 3 compares the three liquid cooling technologies described with the conventional variants of air-cooled data centers in terms of their temperature level, heat transfer and energy efficiency, among other things. The following schematic diagrams illustrate the compared cooling variants.

Table 3: Comparison of liquid- and air-cooled data centers

Direct to chip liquid cooling and immersion cooling achieve the highest coolant temperatures, potentially enabling year-round free cooling and offering good potential for waste heat utilization. ASHRAE defines an annualized Mechanical Load Component (MLC) of 0.13 to 0.25 for data centers with more than 300 kW of IT power [25]. Assuming that pumps and fans contribute an MLC of at most 0.05 when free cooling (or heat reuse) is possible, the savings from operating at higher temperatures can be substantial: the energy needed for cooling drops to roughly 20% to 40% of the reference value, or to about a fourth in the middle of the range. These savings can offset the higher costs of liquid-cooled servers.
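The MLC arithmetic can be made explicit. As we read it, the annualized MLC is the ratio of annual mechanical (cooling) energy to annual IT energy, so the remaining share of cooling energy is simply 0.05 divided by the reference MLC. The sketch below evaluates the ends and the middle of the quoted range; the constant 1 MW IT load used for the annual savings is a hypothetical assumption.

```python
MLC_REFERENCE = (0.13, 0.25)  # annualized MLC range quoted in the text
MLC_LIQUID = 0.05             # assumed upper bound for pumps + fans with free cooling
HOURS_PER_YEAR = 8760


def remaining_cooling_share(mlc_ref: float, mlc_new: float = MLC_LIQUID) -> float:
    """Share of the reference mechanical (cooling) energy that is still needed."""
    return mlc_new / mlc_ref


def annual_savings_mwh(it_power_kw: float, mlc_ref: float, mlc_new: float = MLC_LIQUID) -> float:
    """Mechanical energy saved per year, assuming a constant IT load (hypothetical)."""
    it_energy_mwh = it_power_kw * HOURS_PER_YEAR / 1000.0
    return (mlc_ref - mlc_new) * it_energy_mwh


mid = sum(MLC_REFERENCE) / 2
for ref in (*MLC_REFERENCE, mid):
    print(f"MLC {ref:.2f} -> {MLC_LIQUID:.2f}: {remaining_cooling_share(ref):.0%} of cooling energy remains, "
          f"{annual_savings_mwh(1000.0, ref):.0f} MWh/a saved at a constant 1 MW IT load")
```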

The technology comparison then looks at system differences in the white space’s structure, operation, maintenance, and servicing.

None of the three liquid cooling technologies generally needs an enclosure or ducts for air routing in the white space, since little warm air is released outside the racks.

Compared to air-cooled systems, direct to chip liquid cooling and immersion cooling are also characterized by a lower space requirement, resulting from the higher power density of the servers and the elimination of air-to-air heat exchangers thanks to compact liquid-to-liquid heat exchangers.

In theory, immersion cooling is a simple technology that saves on the development of component-specific heat sinks and often on chiller investment. On the other hand, although maintenance effort should theoretically be lower, maintenance tasks can be time-consuming, as the servers have to drain after being removed from the cooling fluid. Furthermore, the cooling fluids used in immersion cooling can be costly and challenging to handle, and some of them will be affected by the new PFAS regulations. Moreover, the large variety of fluids complicates compatibility within systems and warranty procedures. Like many new technologies, immersion cooling will need to overcome some initial hurdles; standardization, natural refrigerants and potentially robotized maintenance will help its deployment at scale.

Cooling requirements of IT-application

Liquid-cooled servers are often used in environments where high power density is required and conventional air cooling is not sufficient. As shown in this article, liquid cooling has a particular advantage in computing areas requiring high power densities [26]. While until recently liquid-cooled data centers were mostly found in research facilities operating supercomputers, their number is also increasing in commercial cloud data centers, in particular those dedicated to training large language models (LLMs) [27]. However, this technology is also suitable for other applications, depending on the specific technology installed and the conditions at the site. For data centers in temperate climates, liquid cooling can eliminate the need for a compression chiller [28]. This reduces power consumption and significantly lowers initial capex [29].

Conclusion

In summary, liquid cooling in data centers is suitable for all IT applications, but it has mainly been deployed for applications with high computing power, where it appears superior to air cooling owing to its higher heat capture capacity per unit of surface area.

Liquid cooling also performs better in terms of sustainability thanks to its higher energy efficiency, especially cold plate and immersion cooling, which operate at higher temperatures and therefore achieve a higher share of free cooling. The elevated temperature level also offers potential for waste heat utilization, with associated energy and CO2 savings elsewhere [30].

All the liquid cooling variants presented are operated with a cooling circuit that extends up to the rack. Because they share a common liquid-cooling infrastructure to and within the white space (piping, CDUs, heat exchangers, etc.), air-cooled servers (cooled indirectly via liquid), cold plate liquid-cooled servers and immersion-cooled servers can be installed and operated side by side. At the same time, the growing application requirements of AI and data analytics can be met cost-effectively by replacing racks or servers, without completely modifying the existing facility infrastructure of the data center. Future data centers will combine coexisting systems to meet different computing needs. An AI-ready data center is one whose design takes this into account, so that it can host high-density servers alongside traditional, less dense IT equipment (source: AQC).

Exciting innovations are being explored in the field of liquid cooling for IT technology and data centers, which could further improve the efficiency and reliability of cooling technology. These innovations will be explained in a future article.

[1] https://www.datacenterdynamics.com/en/analysis/an-introduction-to-liquid-cooling-in-the-data-center/

[2] https://www.pcmag.com/how-to/pc-cooling-101-how-to-buy-the-right-air-or-water-cooler-for-your-desktop

[3] https://www.computerhistory.org/revolution/mainframe-computers/7/161

[4] https://www.datacenterdynamics.com/en/opinions/ten-years-liquid-cooling/

[5] https://www.esmagazine.com/articles/101454-free-cooling-in-buildings-opportunities-to-drive-significant-savings#:~:text=The%20third%20mode%2C%20100%25%20free,with%20the%20compressors%20turned%20off

[6] https://www.opencompute.org/documents/guidelines-for-using-propylene-glycol-based-heat-transfer-fluids-in-single-phase-cold-plate-based-liquid-cooled-racks-final-pdf

[7] https://submer.com/blog/what-is-immersion-cooling/

[8] https://www.sciencedirect.com/science/article/am/pii/S0017931019336002

[9] https://www.gigabyte.com/Enterprise/GPU-Server/G190-H44-rev-531

[10] ASHRAE (2021), Thermal Guidelines for Data Processing Environments, Fifth Edition.

[11] https://schroff.nvent.com/en-in/solutions/schroff/applications/new-high-performance-rear-door-cooling-unit

[12] https://www.ashrae.org/file%20library/technical%20resources/bookstore/supplemental%20files/referencecard_2021thermalguidelines.pdf

[13] https://www.stulz.com/de-de/produkte/detail/cyberrack/

[14] https://www.carel.com/blog/-/blogs/liquid-cooling-for-data-centres-from-niche-to-mainstream-?utm_source=twitter&utm_medium=social&utm_campaign=tw_pub_org_20220324_blog_liquid_cooling_for_data_centres_from_niche_to_mainstream_

https://eepower.com/technical-articles/smart-water-cooling-for-servers-and-data-centers/

[15] https://www.supermicro.com/solutions/Solution-Brief_Supermicro_Liquid_Cooling_Solution_Guide.pdf

https://www.servethehome.com/supermicro-custom-liquid-cooling-rack-a-look-at-the-cooling-distribution/

https://www.theregister.com/2022/11/08/colovore_liquidcooled_datacenter/

[16] https://www.cloudandheat.com/referenz/thomaskrenn/

[17] https://www.servethehome.com/qct-liquid-cooled-rack-intel-sapphire-rapids-bake-off/

[18] https://koolance.com/hd-60-hard-drive-water-block

[19] https://blog.ovhcloud.com/new-hybrid-immersion-liquid-cooling-developments-at-ovhcloud/

[20] https://www.gigabyte.com/Industry-Solutions/submer-single-phase-immersion-cooling

[21] https://www.gigabyte.com/de/Press/News/1972

[22] https://news.3m.com/2022-12-20-3M-to-Exit-PFAS-Manufacturing-by-the-End-of-2025

[23] https://www.umweltbundesamt.de/eu-beschraenkt-verwendung-weiterer-pfas

[24] https://liquidstack.com/2-phase-immersion-cooling

[25] https://www.ashrae.org/file%20library/technical%20resources/standards%20and%20guidelines/standards%20addenda/90_4_2022_g_20240131.pdf

[26] https://blog.qct.io/de/wasserkuehlung-fuer-ki-und-supercomputing-was-sind-die-groessten-herausforderungen/

[27] https://www.supermicro.com/en/solutions/liquid-cooling

[28] Whether a compression chiller is installed depends on the maximum historical temperatures at the site and on the hardware types. In general, a very cold climate and/or evaporative cooling is needed to free-cool 100% of the hardware (data centers with 100% liquid-cooled hardware are still quite uncommon).

[29] https://www.vactis.it/direkte-freie-kuehlung-und-wasserkuehlung-welche-methode-ist-fuer-tlc-raeume-und-rechenzentren-zu-waehlen/

[30] Reference to our blog article "Who called it a waste?"